NVIDIA's Nemotron 70B: A New Challenger for GPT-4o?

NVIDIA has surprised the AI community with the Nemotron 70B model. We at Evoya are intensively testing the model and will soon make it available to our customers.

Yesterday (October 16, 2024), NVIDIA unexpectedly released the Nemotron 70B model, causing quite a stir in the AI community. With little fanfare but plenty of substance, NVIDIA has brought out a medium-sized model that is already making waves. The release on the Hugging Face platform quickly attracted attention, as first impressions are extremely promising. With "only" 70 billion parameters, Nemotron 70B could be a new star in the AI sky that competes in the territory of much larger language models.

Benchmark King? First Impressions of Nemotron 70B

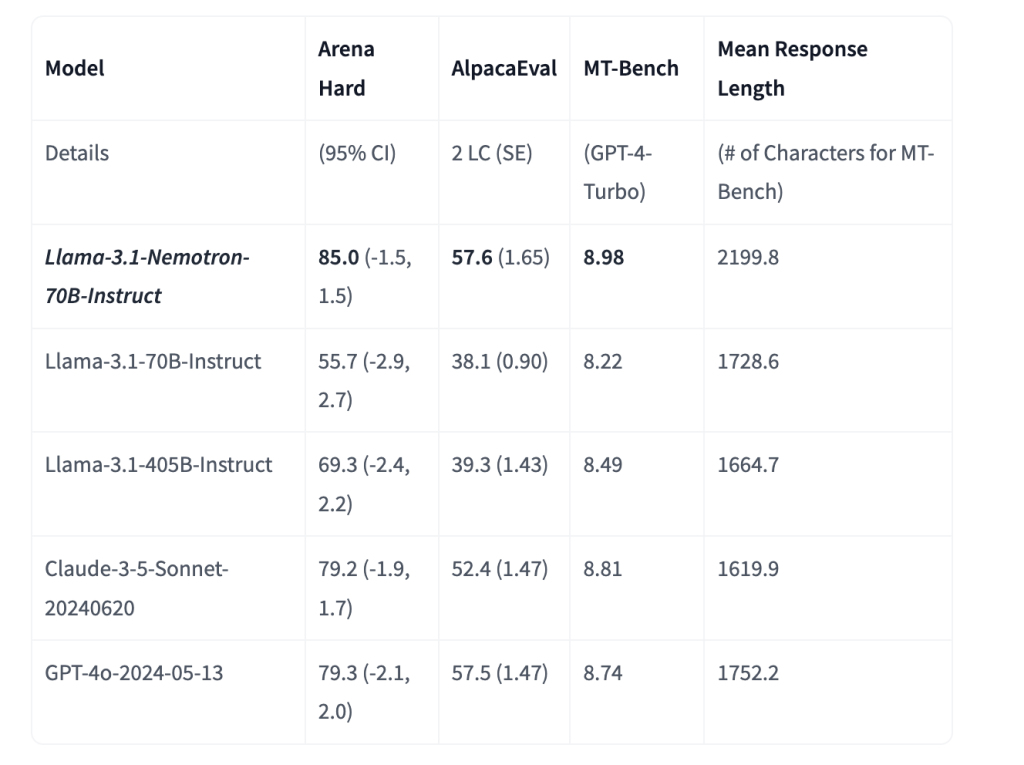

The first benchmark results for Nemotron 70B are impressive.

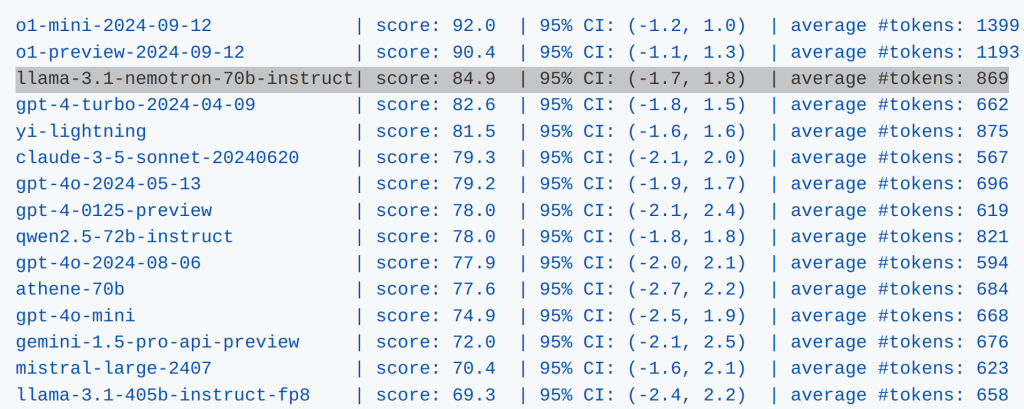

With a score of 84.9 on the Arena Hard benchmark, it surpasses previous leaders such as OpenAI's GPT-4o.

Interestingly, Nemotron, which has 70 billion parameters and is based on Llama 3.1 70B, also easily beats its big brother, the Llama 3.1 model with 405 billion parameters.

However, before we draw hasty conclusions, caution is advised: these results are fresh and will certainly be examined more closely in the coming days. Some observers suspect that the model may have been optimized specifically for the benchmarks. The AI community will watch closely to see whether Nemotron 70B can confirm its promising performance in practice as well.

Meta's Llama 3: The Foundation for NVIDIA's Success

NVIDIA built Nemotron 70B on Meta's proven Llama 3.1 70B model. This open model provides a solid foundation that NVIDIA has further refined with advanced techniques such as Reinforcement Learning from Human Feedback (RLHF). This method lets the model learn from human preferences and thereby provide more natural and contextually appropriate responses. Through this refinement, NVIDIA has created a model that is not only powerful but also adaptable.

The Special Features of Nemotron: What's Under the Hood?

With 70 billion parameters, Nemotron 70B is relatively compact, making it ideal for deployment on private servers. The open-source nature of the model allows developers and companies to customize and optimize it according to their needs. This flexibility, combined with the ability to operate the model on-premise, makes Nemotron 70B a potential game-changer in the AI landscape. It offers a cost-effective and powerful alternative to the large, proprietary models.
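To make the on-premise claim more concrete, here is a minimal back-of-the-envelope sketch of how much memory the weights of a 70-billion-parameter model need at different numeric precisions. The precision table and the function names are our own illustrative assumptions, not figures published by NVIDIA, and the estimate covers the weights only: KV cache, activations, and runtime overhead come on top.

```python
# Rough weight-memory estimate for a dense language model.
# Assumption: memory = parameter count x bytes per parameter (weights only).
# KV cache, activations, and framework overhead are NOT included.

# Illustrative precisions; which ones a given runtime supports is not covered here.
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def estimate_all(num_params: float) -> dict[str, float]:
    """Estimate weight memory for every precision in BYTES_PER_PARAM."""
    return {
        name: weight_memory_gb(num_params, size)
        for name, size in BYTES_PER_PARAM.items()
    }


if __name__ == "__main__":
    for precision, gigabytes in estimate_all(70e9).items():
        print(f"{precision}: ~{gigabytes:.0f} GB")
```

Under these assumptions, the weights alone need roughly 140 GB at 16-bit precision and roughly 35 GB at 4-bit precision, which is why quantization matters when sizing private servers for a model of this class.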

Game-Changer Potential: What Does This Mean for the AI Landscape?

The ability to operate Nemotron 70B on-premise could change how companies deploy AI models. The open-source nature of the model allows for the development of tailored solutions that meet specific requirements. For many companies, on-premise AI is a necessity because they must maintain control over their data, especially where personal data is involved, the handling of which is strictly regulated by law. Nemotron 70B offers a powerful and flexible alternative to the large, proprietary models that are often associated with high costs and restrictions.

Evoya's Use of Nemotron: Our Plans and Expectations

At Evoya, we are excited about the possibilities that Nemotron 70B offers. With its combination of performance, flexibility, and open-source accessibility, it provides an attractive alternative to established, proprietary models.

In the coming days, we will intensively test the model to evaluate its performance and adaptability in real-world applications. After that, Nemotron will be available to our customers in their Evoya AI workspace. We are convinced that Nemotron will prove valuable across various industries, from customer service to data analysis.