On-premise solutions

Avoid data outflow with our on-premise solutions for SMEs

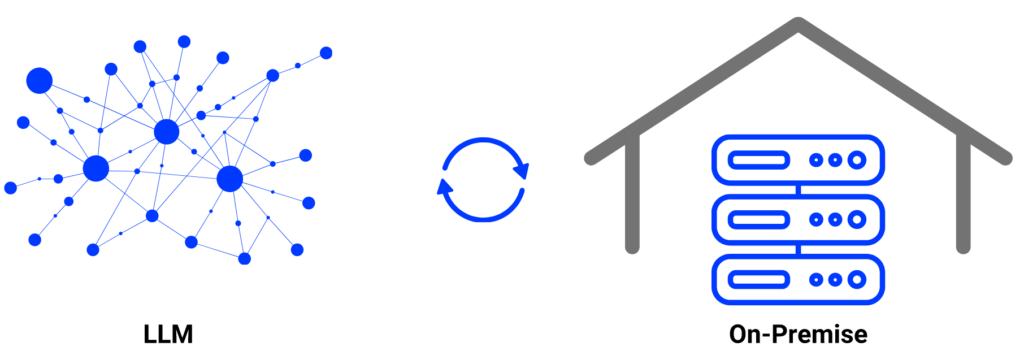

What does on-premise mean?

Run language models (LLMs) on your servers, giving you full control over your data.

An on-premise solution is software or a service that is installed and managed directly on a company's own servers and infrastructure rather than provided via the cloud.

In the context of AI, this means that a large language model (LLM) runs on your SME's own servers.

Advantages for your SME

At a time when data security and control are crucial, our on-premise solutions guarantee that your critical data is managed in-house.

Your data remains securely within your network instead of being transferred to external cloud services.

Stronger security and data protection measures help you adhere to compliance guidelines.

Customization and seamless integration give you more flexibility to meet your business requirements.

Language models on your own infrastructure

Running language models on your own infrastructure strengthens data control and security, enables precise adaptation to company-specific requirements and supports compliance.

This approach not only allows flexible adaptations and integrations but also strengthens stakeholder trust through increased data protection and independent data processing.

Needs analysis and strategy development

In order to create a solid foundation for successful implementation, the first step is to carry out a needs analysis and develop a strategy.

We assess your specific business needs (including an ROI calculation) and develop a customized strategy for the implementation of language models on your infrastructure.

Technical architecture planning

In the next step, we focus on technical architecture planning.

We design a detailed technical architecture that allows the language model to integrate seamlessly into your existing IT landscape.

Implementation

Once planning and analysis are complete, implementation begins in close collaboration with your IT team. We install and configure the customized language model on your infrastructure so that it integrates smoothly and aligns with your business requirements. Throughout this phase, we focus on efficiency and a smooth rollout.