The new Llama 4 model family: a quantum leap in open AI

Meta has made significant progress in the field of open AI models with the release of the Llama 4 model family. The new models not only surpass their predecessors in performance and capabilities, but also set new standards in the AI landscape, not least with the revolutionary 10 million token context window of Llama 4 Scout.

Overview: The Llama 4 model family

With Llama 4, Meta has introduced not just a single model, but an entire family of specialized AI models:

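- Llama 4 Scout: The compact model with 17B active parameters (109B total) and a 10 million token context window

- Llama 4 Maverick: The versatile model with 17B active parameters (400B total) and a 1 million token context window

- Llama 4 Behemoth: The powerful model with 288B active parameters (around 2T total), currently still being trained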

The three variants in detail

Llama 4 Scout (17B)

Scout is the most compact model in the family and has been optimized for applications where efficiency and speed are paramount:

- 17 billion active parameters (109 billion in total, spread across 16 experts)

- Optimized for resource-constrained environments: according to Meta, it fits on a single NVIDIA H100 GPU with Int4 quantization

- Well suited to latency-sensitive and real-time applications

- Supports context windows of up to 10M tokens

Llama 4 Scout is the first publicly available model with such an extensive context window. It opens up completely new applications, from the analysis of entire books to the processing of complex scientific documents.
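A quick back-of-the-envelope calculation shows why Scout's total parameter count, not its 17 billion active parameters, determines how much memory it needs: all experts have to be held in memory even though only a few are used per token. The sketch below assumes Meta's published total of about 109 billion parameters and counts the weights only; the KV cache, activations and runtime overhead, which grow with context length, are deliberately ignored. The 80 GB figure is the memory of a single NVIDIA H100 (80 GB variant).

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumptions: ~109B total parameters for Llama 4 Scout (every expert must be
# resident in memory); weights only, no KV cache, activations or overhead.

BYTES_PER_PARAM = {
    "bf16": 2.0,
    "fp8": 1.0,
    "int4": 0.5,
}

H100_MEMORY_GB = 80  # memory of a single NVIDIA H100 (80 GB variant)


def weight_memory_gb(total_params: float, precision: str) -> float:
    """Return the memory in GB (1 GB = 1e9 bytes) needed to store the weights."""
    return total_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    scout_total_params = 109e9  # total parameters, not the 17B active ones
    for precision in ("bf16", "fp8", "int4"):
        gb = weight_memory_gb(scout_total_params, precision)
        verdict = "fits" if gb <= H100_MEMORY_GB else "does not fit"
        print(f"{precision}: {gb:.1f} GB -> {verdict} on one H100 (weights only)")
```

With Int4 weights the estimate comes to about 54.5 GB, in line with Meta's statement that Scout runs on a single H100 when quantized; in bf16 the weights alone would need about 218 GB.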

Llama 4 Maverick (400B)

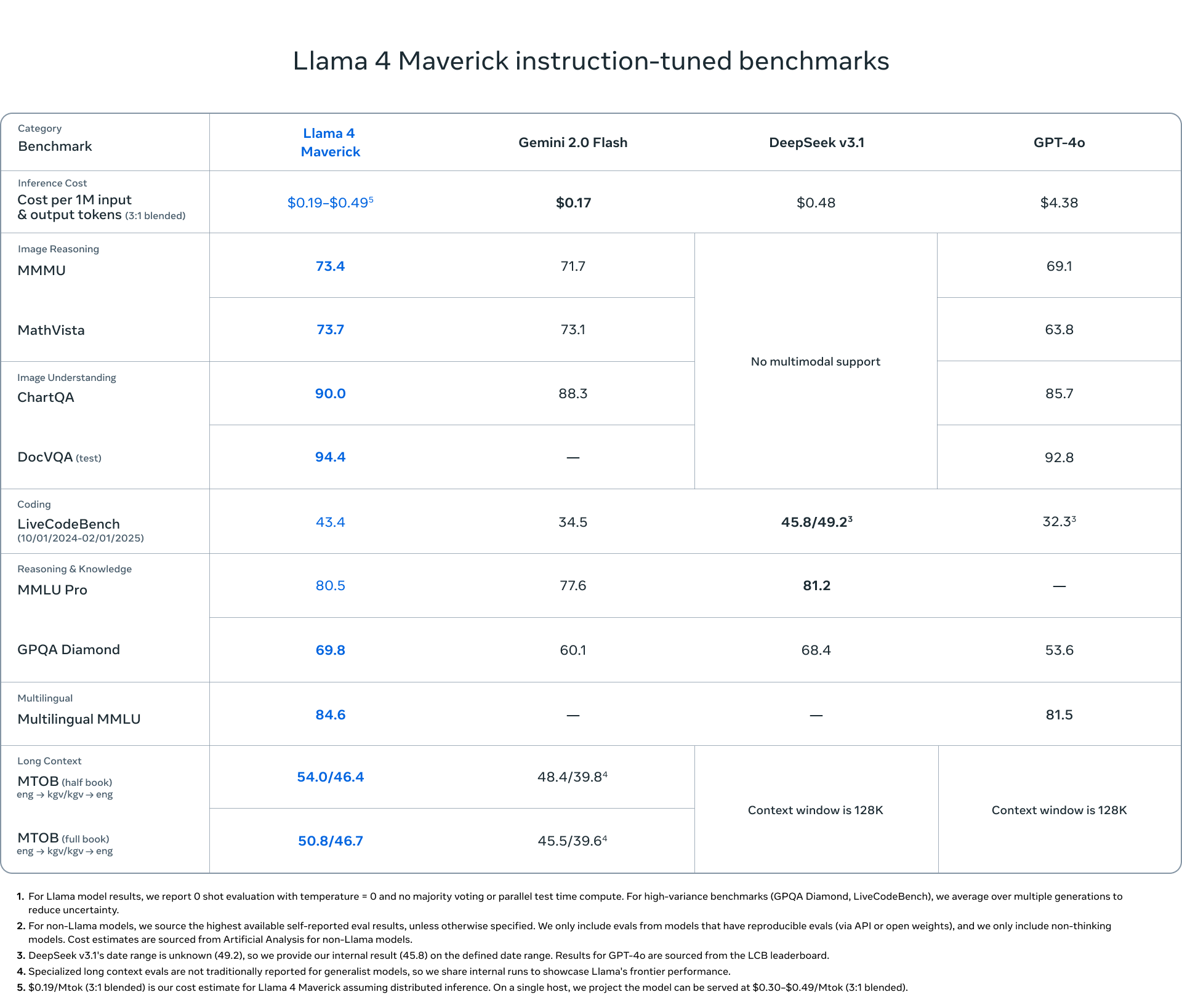

Maverick positions itself as a versatile all-round model with impressive performance:

- 400 billion parameters in total (17 billion active per token, 128 experts)

- Good balance between performance and resource requirements

- Strong performance on complex reasoning tasks

- Supports context windows of up to 1M tokens

In our initial tests at Evoya AI, Maverick has proven to be extremely versatile, delivering results comparable to or better than proprietary models such as GPT-4o in many areas.

Llama 4 Behemoth (2T)

Behemoth is the flagship of the Llama 4 family and by far its largest model:

- Around 2 trillion parameters in total (288 billion active per token)

- Outstanding performance for complex tasks and long contexts

- Multimodal capabilities for text, code and images

Not much is known about Llama 4 Behemoth yet, as the model is still in training and has not been released. It will be interesting to see how it performs once it becomes available.

Technical innovations in Llama 4

The Llama 4 model family features several technical innovations:

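- Improved architecture: Llama 4 is the first Llama generation built on a mixture-of-experts (MoE) Transformer, in which each token activates only a fraction of the parameters, and it interleaves attention layers without positional embeddings to handle very long contexts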

- Advanced training: New training methods that significantly reduce the hallucination rate

- Multimodal capabilities: Native integration of text, code and image understanding in one model
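To make the mixture-of-experts idea more concrete, here is a toy MoE layer in NumPy. A router scores every expert for each token, only the top-scoring experts actually run, and a shared expert processes every token. Meta describes Maverick as sending each token to a shared expert plus one of its 128 routed experts, so the example uses `top_k=1` and a shared expert. However, the tiny sizes, the random weights, the single linear map per expert and the softmax gating are illustrative assumptions. This is a sketch of the general technique, not Meta's implementation.

```python
import numpy as np


def moe_layer(x, gate_w, expert_ws, shared_w, top_k=1):
    """Toy mixture-of-experts layer (illustrative only, not Llama 4's code).

    x:         (tokens, d) input activations
    gate_w:    (d, n_experts) router weights
    expert_ws: (n_experts, d, d) one linear map per routed expert
    shared_w:  (d, d) shared expert applied to every token
    top_k:     number of routed experts each token is sent to
    """
    logits = x @ gate_w                                   # router score per expert
    top = np.argsort(logits, axis=-1)[:, -top_k:]         # top-k expert indices
    top_logits = np.take_along_axis(logits, top, axis=-1)
    weights = np.exp(top_logits - top_logits.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)        # softmax over chosen experts

    out = x @ shared_w                                    # shared expert sees every token
    for t in range(x.shape[0]):
        for j in range(top_k):
            e = top[t, j]
            out[t] += weights[t, j] * (x[t] @ expert_ws[e])  # only chosen experts run
    return out, top


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, n_experts, tokens, top_k = 8, 16, 4, 1
    x = rng.standard_normal((tokens, d))
    gate_w = rng.standard_normal((d, n_experts))
    expert_ws = rng.standard_normal((n_experts, d, d))
    shared_w = rng.standard_normal((d, d))

    out, chosen = moe_layer(x, gate_w, expert_ws, shared_w, top_k=top_k)
    total = expert_ws.size + shared_w.size + gate_w.size
    active = top_k * d * d + shared_w.size + gate_w.size
    print("expert chosen per token:", chosen.ravel())
    print(f"parameters used per token: {active} of {total}")
```

In this toy configuration each token touches 256 of 1,216 parameters. The same principle explains why Scout and Maverick use only 17 billion parameters per token despite much larger totals.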

The importance of the 10 million token context window

Llama 4 Scout’s 10 million token context window is more than just an impressive number – it’s a paradigm shift for AI applications:

- Processing entire books or scientific papers in one go

- Analysis of large codebases without having to split them into chunks

- Long-term memory for complex conversations

- Processing and analyzing large data sets in context

For comparison: GPT-4o offers a context window of 128K tokens, while Claude 3 Opus has 200K tokens. With 10 million tokens, Llama 4 Scout exceeds these values by factors of roughly 78 and 50 respectively, and thus enables completely new application scenarios.
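To get a feel for these numbers, the following sketch estimates whether a text fits into each context window in a single pass. It relies on the common rule of thumb of about four characters per token for English text. Real token counts depend on the model's tokenizer and the language, so exact figures require running the text through the actual tokenizer. The window sizes are the ones quoted above, and the 20 MB codebase is a hypothetical example.

```python
import math

# Context window sizes quoted in the text, in tokens.
CONTEXT_WINDOWS = {
    "GPT-4o": 128_000,
    "Claude 3 Opus": 200_000,
    "Llama 4 Maverick": 1_000_000,
    "Llama 4 Scout": 10_000_000,
}

CHARS_PER_TOKEN = 4  # rough heuristic for English text (assumption, not a tokenizer)


def estimate_tokens(num_chars: int) -> int:
    """Roughly estimate the token count of a text with num_chars characters."""
    return math.ceil(num_chars / CHARS_PER_TOKEN)


def models_that_fit(num_chars: int) -> list[str]:
    """Return the models whose context window can hold the whole text at once."""
    tokens = estimate_tokens(num_chars)
    return [name for name, window in CONTEXT_WINDOWS.items() if tokens <= window]


if __name__ == "__main__":
    codebase_chars = 20_000_000  # hypothetical codebase of about 20 MB of text
    print("estimated tokens:", estimate_tokens(codebase_chars))
    print("fits into:", models_that_fit(codebase_chars))
    ratio = CONTEXT_WINDOWS["Llama 4 Scout"] / CONTEXT_WINDOWS["GPT-4o"]
    print(f"Scout vs. GPT-4o: {ratio:.0f}x")
```

For the hypothetical 20 MB codebase, the estimate comes to about 5 million tokens. Of the models listed, only Scout's window could hold that in one go.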

In our initial tests with Evoya AI, we have already observed how the large context window significantly improves the quality and coherence of answers to complex tasks. The ability to take large amounts of context into account leads to more precise and nuanced results.

Meta’s open source strategy and EU licensing

An important aspect of the Llama 4 release is Meta’s continuation of its open source strategy, but with some notable changes:

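- The models are published under the Llama 4 Community License, a custom license that is more restrictive than classic open source licenses

- Commercial use is permitted under certain conditions; for example, companies with more than 700 million monthly active users need a separate license from Meta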

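- Restrictions for EU users: Due to regulatory uncertainties in connection with the AI Act, the license does not grant individuals domiciled in the EU, or companies with their principal place of business there, the right to use the multimodal Llama 4 models; end users of products built on these models are not affected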

The EU licensing restrictions pose a significant challenge for European companies. Meta is responding to the uncertainties surrounding the EU AI Act, which underlines the complexity of the regulatory landscape for AI in Europe.

Implications for the open source community

The release of Llama 4 has far-reaching implications for the open source AI community:

- Democratization of advanced AI technologies

- Promoting innovation through open access to state-of-the-art models – Llama 3 was already a game changer here

- Challenging proprietary models with powerful open alternatives

For smaller companies and start-ups in particular, the Llama 4 models open up new opportunities to develop advanced AI applications without having to rely on proprietary services.

At Evoya AI, we have already started integrating the Llama 4 models into our workspace and testing the first use cases. The results are promising and show the enormous potential of this new model family.

Llama 4 soon available at Evoya AI

With the Llama 4 model family, Meta has taken a significant step in the development of open AI models. The combination of powerful models in various sizes, Scout's revolutionary 10 million token context window and open licensing (albeit with restrictions in the EU) will have a lasting impact on the AI landscape.

For companies and developers, the Llama 4 models offer an attractive alternative to proprietary services and open up new possibilities for innovative applications. However, the EU licensing restrictions pose a challenge that underlines the complexity of the regulatory landscape for AI in Europe.

At Evoya AI, we will continue to test the Llama 4 models and integrate them into our workspace to provide our customers with access to the latest developments in open AI. The future of AI remains dynamic – and with Llama 4, Meta has made another important contribution to this future.